FOSDEM 2025 has exceeded my expectations

This weekend, I participated in FOSDEM 2025, which impressed me more than I had imagined. Boy, was it big—over 1,000 talks! Here are a few notable takeaways:

Apache Arrow is a top dog, with a turbulent future

The first panel I attended on day one was Apache Arrow’s. It felt surreal to meet the contributors face-to-face—they all seemed like genuinely nice people who simply know their niche very well.

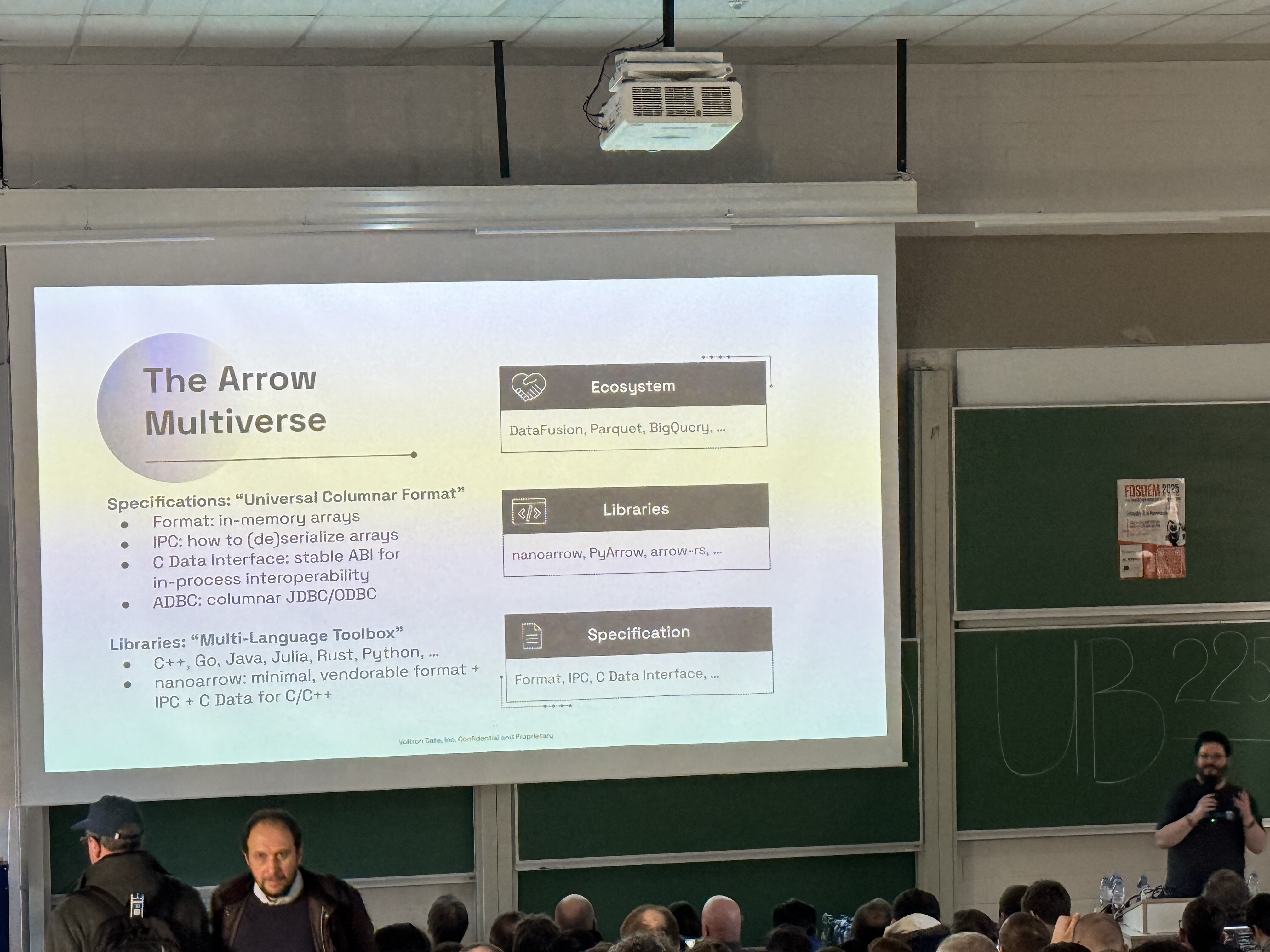

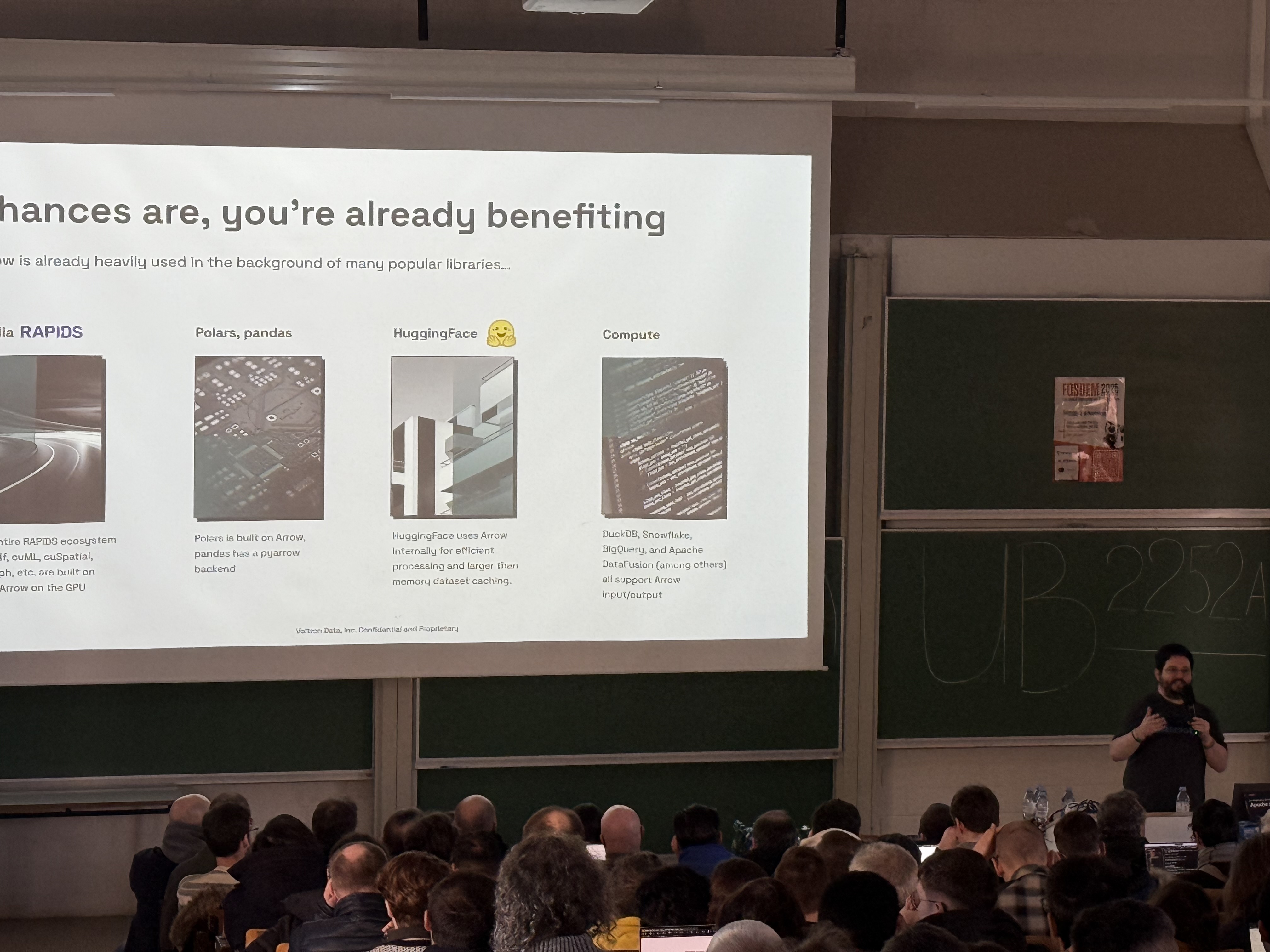

I’ve been aware of Arrow for a few years, and this panel only reinforced my confidence in it as the standard in-memory analytics data format for interoperability between languages, processes, and systems. With identically laid-out bytes in memory (based on their spec) for Python, Go, Rust—heck, even Java (crazy, right?) — you can operate on the same shared memory block across different processes and even different stacks without any serialization or deserialization. This is why DuckDB can pass data to Pandas without any overhead, why Spark (with the Arrow option enabled) can pass data to Pandas without any overhead, and why Polars can do the same.

An additional benefit is that it’s columnar, meaning bytes are laid out in a way that allows highly efficient operations on single or multiple columns (e.g., sum, count, etc.). As an example, Polars leverages it as a in-memory storage format with great success.

→ Arrow enables different languages, processes, and systems do work on and communicate at maximum speed — think of it as the English language for software.

Another cool application they mentioned was using Arrow at Hugging Face: efficiently handling data larger than memory for LLMs with their Datasets library. We all know how to stream data from disk when RAM is limited - implementing reading in batches - but with Arrow, you can dump memory bytes to disk 1:1 (pure bytes without any changes) and memory-map the disk to “pretend” it’s RAM — kind of similar to swapping - but with efficient read-in since no deserialization occurs. So, basically, you dump bytes into a .arrow file (with PyArrow), straight into /tmp/, and then mmap, which is much faster and less code than doing the same with Parquet files. Extremely useful for loading datasets larger than memory in a convenient fashion.

A bit of drama — Voltron Data, the main contributor to Arrow, was hit by layoffs last year. The majority of its staff had to find other jobs, meaning a significant portion of Arrow maintainers left. People expressed their commitment to finding ways to support the Arrow project (e.g., through foundations) to ensure its continued maintenance, but they seemed quite concerned nonetheless.

With Arrow, nothing prevents us from implementing a dataframe library in Go, right?

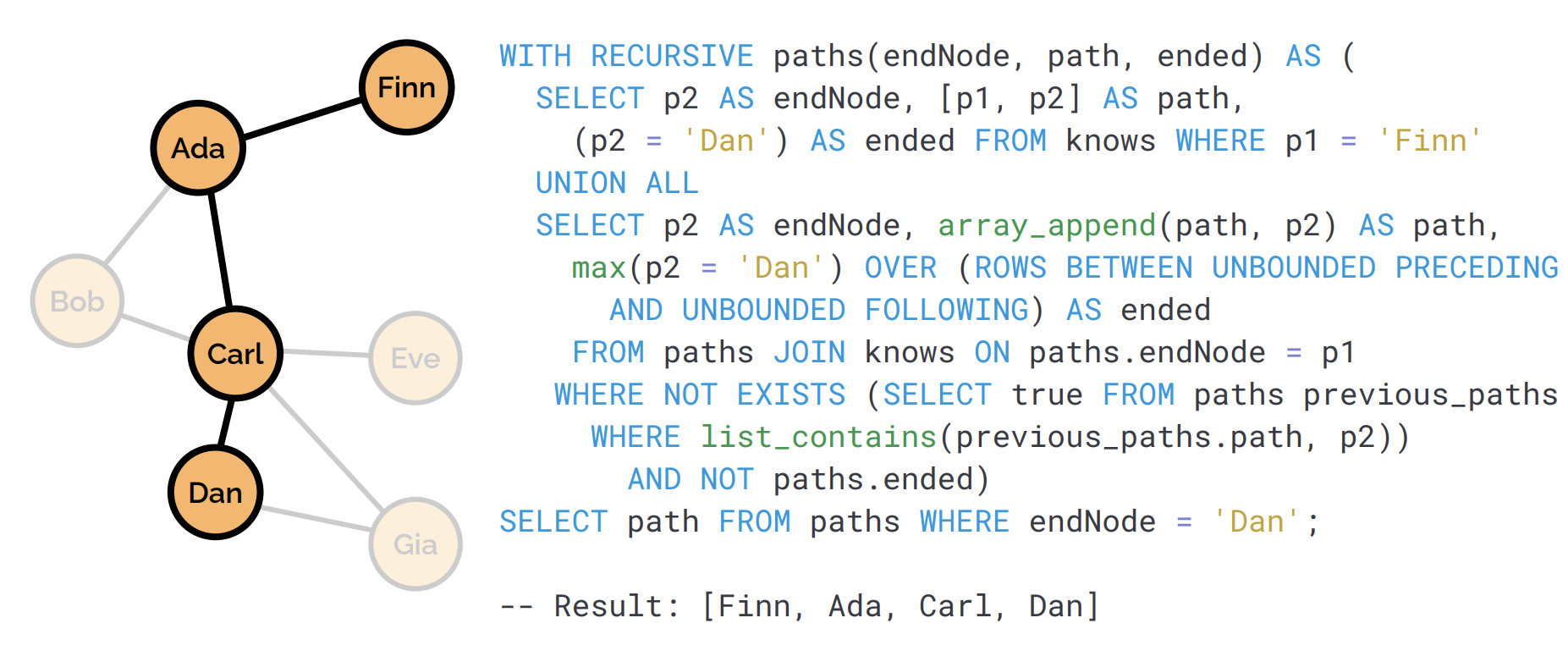

Graph databases will not conquer the world, but they have their niches

Great historical talk by one of DuckDB’s developer advocates, Gábor Szárnyas, who is apparently a reputable graph database researcher as well! Key takeaway: graph databases excel at nested/recursive multi-join problems because (a) writing SQL for something like the shortest path quickly turns into a mess of LISTAGG, multiple joins, etc., and (b) they leverage factorization under the hood—flattening certain columns into lists to reduce joins (e.g., storing a person’s friend list as a list instead of separate rows). This makes them dominant in this niche.

However, graph DBs won’t replace RDMS - they are neither faster (usually since they are schemaless), nor better supported (very fragmented landscape due to overfitting to specific use-cases).

On a positive note, his group has made massive leaps on a graph DB benchmarking suite, which should move the field forward either way.

Opentelemetry + | insert your favorite OLAP engine | is the way to go

There was a talk from a Clickhouse developer advocate, who once more preached Clickhouse for telemetry. He had a point though.

I don’t have years of SRE experience to make any strong recommendations here, but having dabbled a bit with the classic Prometheus + Grafana setup, I’ve noticed two issues:

- PromQL is a terrible language—hard to read and write, especially compared to SQL.

- Prometheus time-series DB is relatively slow, given the sheer amount of data it has to process.

- Prometheus instrumentation client is okay, but why lock yourself into Prometheus when you can use OpenTelemetry and switch between different backends?

OK, you may have to set-up some things e.g. a UI, but it’s not the hard part i.e. you can set-up Granana with some SQL sources e.g. the Clickhouse, and you’re good to go.

From my perspective, it’s just that we have ingrained habits of setting up these telemetry stacks. I have to agree with the overly excited ClickHouse presenters: the future telemetry stack is OpenTelemetry (to reduce vendor lock-in) combined with any real-time OLAP database, like ClickHouse. Sums, counts, percentiles, etc., are OLAP’s bread and butter—typically all one- or multi-column operations.

Will benchmark sometime soon. This entire area can be IMHO disrupted™.

Wasm will not replace containers, but it is the definition of cool

Dan Phillips showcased a pretty cool project he’s been developing, which essentially takes a Dockerfile, runs box build -f Dockerfile, and produces a WASM binary that can even run in a browser. In general, this results in much lower startup times and better isolation, which I definitely agree with.

Here’s the catch: no, containers are not disappearing. WASM has slower runtime performance because the WASM runtime acts like a VM executing WASM assembly-like bytecode. They are reimplementing every syscall to match the same interface, which requires an astonishing amount of effort. On top of that, they’re reimplementing every Dockerfile command.

Nonetheless, it’s a cool project, the author seems like a great enthusiastic engineer, and I can see it being used for short-running FAAS (short runtime, but the syscalls are the real challenge).

CNCF is a big deal

To be fair, I’d never heard of CNCF, but they’re such a professional non-profit and the parent company of Kubernetes, Prometheus, and many other projects. They shared some thoughts on how they’re not too concerned if some individual contributors disappear for reasons like family commitments, but the bigger issue arises when projects are maintained by a single entity that can just reorganize overnight. Apache Arrow, is that you?

Keep up the good work, CNCF!

Distributed Systems are (again) notoriously hard to build

“Was Leslie Lamport Right?” — that’s the title of the main talk in the distributed systems track. Apparently, the legendary analyst and educator in distributed systems even before he had a distributed system at home. I learned a bit about Lamport clocks and how distributed systems sync in discrete and relative time, not wall-clock time. It made me think that offloading as much state as possible to S3 might not be such a bad idea, and that building one should be (and remain) a highly rewarding side project.

FSFE is also a big deal

Massive respect for the folks who have been advocating for nearly 20 years for extendable and replaceable software. Although the presenter stirred the pot a bit with the audience — some folks didn’t buy the idea that mobile device software is not modifyable — let’s take Apple, FSFE’s current target - sure, you can change iOS into something open-source, but that’s only feasible if you’re a 10x hobbyist engineer, not regular folk IMHO. Best of luck to them!

Conclusion

The conference beat to dust every commercial conference I have ever been to. It was an eye-opening experience tech-wise and great for meeting old and existing colleagues for some networking. Brussels is the perfect setting for it as well - well connected and just the right European vibes. Great food and drinks too…

Will return next year.